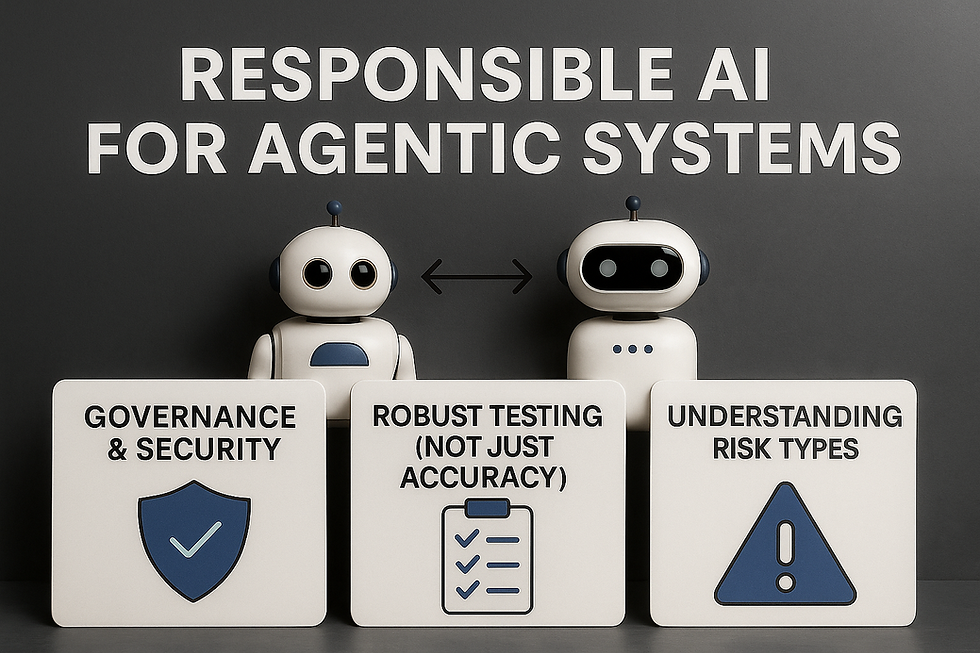

The 3 Pillars of Responsible Agentic AI

- Rich Thompson

- Jun 9, 2025

- 4 min read

AI systems today seamlessly collaborate, tackling complex challenges with ease, freeing us to focus on what truly matters.

But here’s the real question: What happens when these AI agents start communicating with each other

How do we, as leaders, ethically guide them, govern their actions, and take responsibility for the choices they make on our behalf?

The Evolving Definition of Responsible AI

People are increasingly prioritising responsible AI right from the outset, which is an encouraging development. This shift stems from the incredible potential agentic AI holds, alongside the challenges it may introduce.

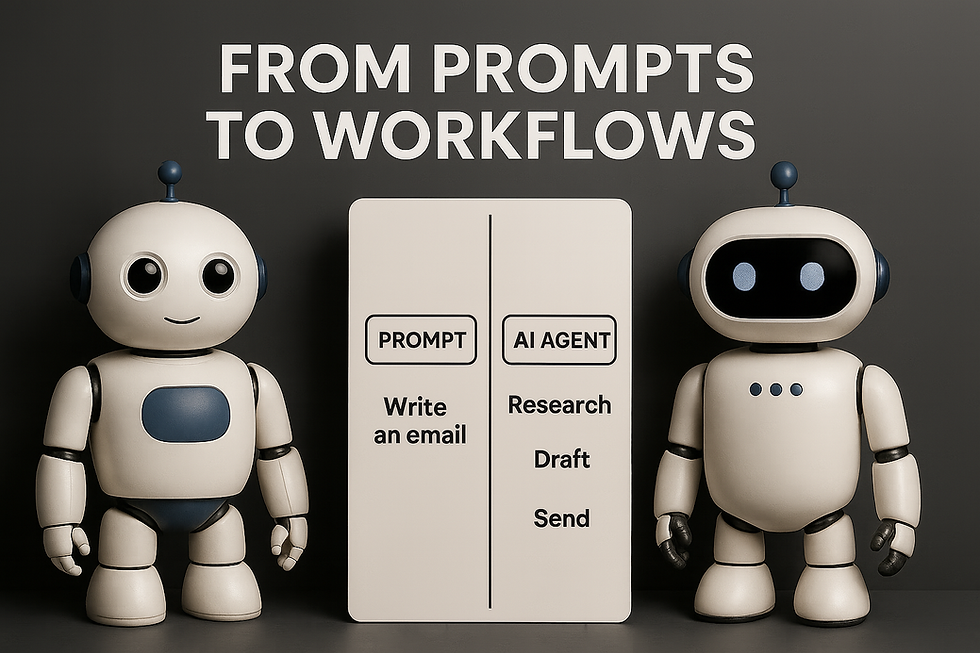

Personally, I believe the true value of such systems lies in their ability to autonomously complete tasks for us, rather than merely being conversational tools.

However, with these advanced capabilities comes an expanded risk, making it all the more crucial to approach their implementation thoughtfully.

Understanding Multi-Agentic Systems

The term "multi-agentic AI" might sound like futuristic jargon, but its practical application is already here and surprisingly straightforward:

Multi-agent systems are transforming how tasks are handled by breaking them down into smaller, manageable subtasks. The real advantage lies in the ability to specialise each agent for its specific subtask. By fine-tuning and rigorously testing each agent, we can ensure it excels in its role while implementing safeguards to keep it focused on its designated task. These agents can then coordinate and collaborate seamlessly to accomplish larger, more complex goals, creating a system that is both efficient and effective.

Think of it less as a chaotic hive mind and more like a well-organised assembly line, where each specialised agent performs its function reliably.

The Three Pillars of Governing Agentic AI

1. Security and Governance

Agents are essentially new entities within an organisation's digital ecosystem - not quite a user, not quite an application. They need to be governed as such.

One of the primary concerns with AI is security. As agents become more integrated into business processes, they have access to sensitive information and can potentially pose a threat if they are not properly secured. Therefore, businesses must prioritise implementing strong security measures to protect against data breaches.

Governance is also crucial in ensuring the responsible use of AI. Businesses need to establish clear guidelines and protocols for how agents should behave, what types of decisions they are allowed to make, and how they should handle sensitive information.

Organisations should apply the same robust security and access control principles to systems and devices as they do to human users.

2. Robust Testing

A common misconception about AI testing is that it solely focuses on evaluating accuracy.

While accuracy is undeniably important, other factors such as robustness—the ability to handle unexpected inputs or scenarios—are equally critical.

Drawing lessons from cybersecurity, AI testing should follow the same fundamental steps as traditional software testing but with specific modifications tailored to the unique challenges of AI.

A thorough AI testing process typically includes four key stages: unit testing, integration testing, system testing, and acceptance testing.

Unit Testing: This phase involves assessing individual components of the AI system to ensure they function correctly and deliver the expected results.

Integration Testing: This step verifies that these components work together seamlessly, ensuring smooth interactions within the system.

System Testing: Here, the entire AI system is evaluated in a simulated production environment to test its overall performance and reliability.

Acceptance Testing: Finally, end-users or stakeholders validate whether the AI system meets their needs, requirements, and expectations. This final step is crucial in ensuring the system aligns with its intended purpose and delivers real value.

Beyond functionality, incorporating ethical considerations into the AI testing process is essential. This includes evaluating the system’s ability to avoid harmful behaviours, such as prompt injections, bias, or the unauthorised generation of copyrighted material. By addressing these vulnerabilities, you can enhance both trust and reliability.

To build a robust AI system, testing should simulate real-world scenarios and edge cases to uncover weaknesses and identify areas for improvement. From task-specific accuracy to the detection of vulnerabilities, a comprehensive approach to testing ensures the system is not only effective but also resilient under diverse conditions.

3. Understanding the 3 types of Risks

Malfunctions: The AI does something it's not supposed to, like going off-task, producing harmful content, or leaking sensitive data.

Misuse: This can be unintentional (a user not understanding the AI's capabilities) or intentional (malicious actors). This is addressed through education, clear user interfaces, and security defences.

Systemic Risk: This involves the broader societal impact, such as the effect on the workforce. With agents poised to take over mundane tasks, there's a need to prepare and upskill employees for a new way of working.

Final Takeaway: Proceed with Intention

Approach Agentic AI with your eyes wide open. Understand the risks involved and take them seriously, because investing in responsible AI is essential.

Simply deploying systems without proper testing is a mistake we strongly advise against.

Instead, develop a clear, intentional plan for building and testing your AI system, and ensure that it aligns with your company's values and goals.

Remember, change takes time and effort. But by investing in responsible AI, we can pave the way for a brighter future where technology works for us, not against us. Don't be afraid of this powerful tool - embrace it with intention and responsibility.

Comments